A Block Coordinate Ascent Algorithm for Mean-Variance Optimization.

B. Liu*, T. Xie* (* equal contribution), Y. Xu, M. Ghavamzadeh, Y. Chow, D. Lyu, D. Yoon

32nd Conference on Neural Information Processing Systems (NIPS), Montréal, Canada, 2018

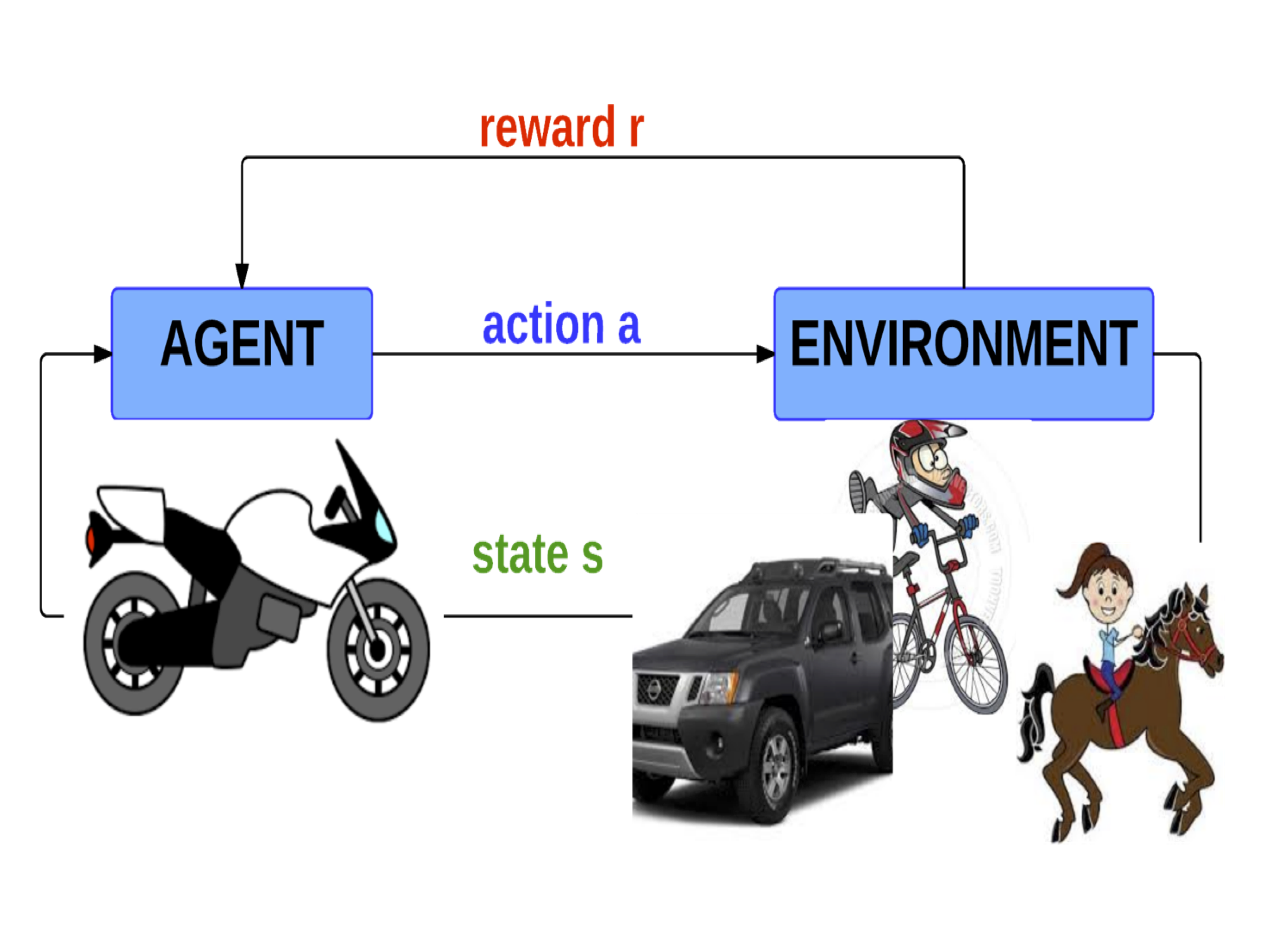

The first risk-sensitive policy search algorithm with a single time-scale update and a sample complexity analysis. It is also the first work to introduce a coordinate descent/ascent formulation into reinforcement learning.

*: Co-primary authors with equal contributions. The author order is either alphabetical or reverse alphabetical.